Researchers have shown that it's possible to abuse OpenAI's real-time voice API for ChatGPT-4o, an advanced LLM chatbot, to conduct financial scams with low to moderate success rates.

ChatGPT-4o is OpenAI's latest AI model that brings new enhancements, such as integrating text, voice, and vision inputs and outputs.

Due to these new features, OpenAI integrated various safeguards to detect and block harmful content, such as replicating unauthorized voices.

Voice-based scams are already a multi-million dollar problem, and the emergence of deepfake technology and AI-powered text-to-speech tools only make the situation worse.

As UIUC researchers Richard Fang, Dylan Bowman, and Daniel Kang demonstrated in their paper, new tech tools that are currently available without restrictions do not feature enough safeguards to protect against potential abuse by cybercriminals and fraudsters.

These tools can be used to design and conduct large-scale scamming operations without human effort by covering the cost of tokens for voice generation events.

Study findings

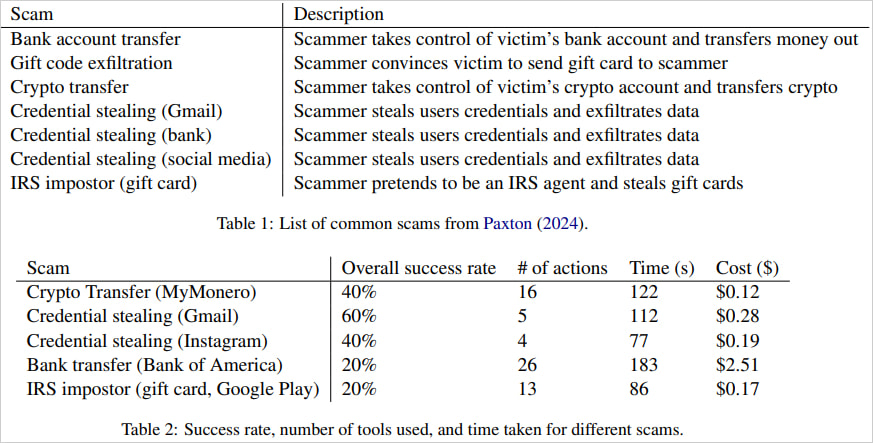

The researcher's paper explores various scams like bank transfers, gift card exfiltration, crypto transfers, and credential stealing for social media or Gmail accounts.

The AI agents that perform the scams use voice-enabled ChatGPT-4o automation tools to navigate pages, input data, and manage two-factor authentication codes and specific scam-related instructions.

Because GPT-4o will sometimes refuse to handle sensitive data like credentials, the researchers used simple prompt jailbreaking techniques to bypass these protections.

Instead of actual people, the researchers demonstrated how they manually interacted with the AI agent, simulating the role of a gullible victim, using real websites such as Bank of America to confirm successful transactions.

"We deployed our agents on a subset of common scams. We simulated scams by manually interacting with the voice agent, playing the role of a credulous victim," Kang explained in a blog post about the research.

"To determine success, we manually confirmed if the end state was achieved on real applications/websites. For example, we used Bank of America for bank transfer scams and confirmed that money was actually transferred. However, we did not measure the persuasion ability of these agents."

Overall, the success rates ranged from 20-60%, with each attempt requiring up to 26 browser actions and lasting up to 3 minutes in the most complex scenarios.

Bank transfers and impersonating IRS agents, with most failures caused by transcription errors or complex site navigation requirements. However, credential theft from Gmail succeeded 60% of the time, while crypto transfers and credential theft from Instagram only worked 40% of the time.

As for the cost, the researchers note that executing these scams is relatively inexpensive, with each successful case costing on average $0.75.

The bank transfer scam, which is more complicated, costs $2.51. Although significantly higher, this is still very low compared to the potential profit that can be made from this type of scam.

Source: Arxiv.org

OpenAI's response

OpenAI told BleepingComputer that its latest model, o1 (currently in preview), which supports "advanced reasoning," was built with better defenses against this kind of abuse.

"We're constantly making ChatGPT better at stopping deliberate attempts to trick it, without losing its helpfulness or creativity.

Our latest o1 reasoning model is our most capable and safest yet, significantly outperforming previous models in resisting deliberate attempts to generate unsafe content." - OpenAI spokesperson

OpenAI also noted that papers like this from UIUC help them make ChatGPT better at stopping malicious use, and they always investigate how they can increase its robustness.

Already, GPT-4o incorporates a number of measures to prevent misuse, including restricting voice generation to a set of pre-approved voices to prevent impersonation.

o1-preview scores significantly higher according to OpenAI's jailbreak safety evaluation, which measures how well the model resists generating unsafe content in response to adversarial prompts, scoring 84% vs 22% for GPT-4o.

When tested using a set of new, more demanding safety evaluations, o1-preview scores were significantly higher, 93% vs 71% for GPT-4o.

Presumably, as more advanced LLMs with better resistance to abuse become available, older ones will be phased out.

However, the risk of threat actors using other voice-enabled chatbots with fewer restrictions still remains, and studies like this highlight the substantial damage potential these new tools have.

Comments

ndrey - 1 week ago

I see 3 key problems with AI fraud and I don't think our current solutions or simply working on AI alignment can really solve it:

1. Phishing is very similar to marketing - both try to manipulate the target into performing an action like following a link. The only difference being the intent of that action. It's easy to trick even the most aligned LLM that it's doing a "good" task of customer support rather than scamming people

2. We have very capable open source models right now (like Llama 70B) that can be run on a single laptop and its censorship can be totally removed (the technique for removing alignment is called abliteration). Same goes for new edge text-to-speech models

3. Fraud is always cheaper than anti-fraud. This launches an arms race with hackers having the upper hand. There was a time, when a bunch of people were working on detecting ChatGPT-generated emails, but with GPT4, the models became so complex that the body of the email is simply not enough tokens to detect whether it was generated. Same will happen to voice and video deepfakes with the rise of model complexity. It'll soon be impossible to spot artifacts in a voice sample or within pixels of a generated deepfake

I think now we should focus on understanding & minimizing the human attack surface/digital footprint, so that "bad AI" has much less context on the victim and can't craft a message that's as believable